In a previous article, I explained the three phases of PP.io: “Strong Center”, “Weak Center”, and “Decenter”. This article explains why I chose to build the PP.io decentralized storage network gradually, in these three steps:

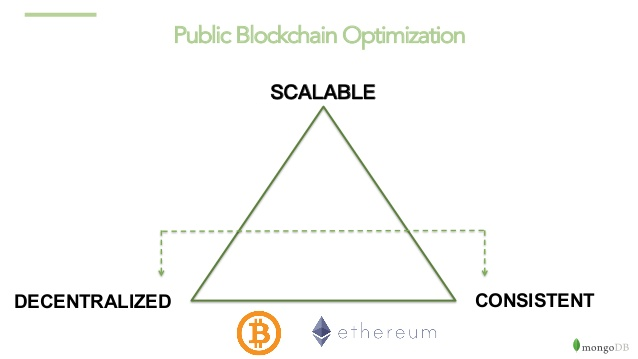

Simply put, within the blockchain impossible triangle, I temporarily gave up decentralization in exchange for scalability and consistency.

Let me first explain the impossible triangle theory. It says that three properties, Scalable, Decentralized and Consistent, cannot all be achieved at once; at most two of them can be chosen. Bitcoin and Ethereum sacrificed scalability because they were built as cryptocurrencies.

When I was designing PP.io, I sacrificed decentralization to meet the real needs of the use case.

What is my detailed thought process? The following three aspects are what I mainly considered.

The very complicated proof mechanism

The proof mechanisms of cryptocurrencies such as Bitcoin and Ethereum are very simple. Miners are essentially solving a math puzzle by guessing numbers. Everyone keeps guessing a number until the hash computed from the block and that number starts with enough consecutive zeros to satisfy the rules of the game. Whoever finds such a number first wins and gets the reward from the new block.
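To make the guessing game concrete, here is a minimal Python sketch of a toy proof of work. It is an illustration under simplifying assumptions, not Bitcoin's real block format: the function names, the 8-byte nonce encoding and the `difficulty_bits` parameter are all hypothetical, and only the double SHA-256 hash is borrowed from Bitcoin.

```python
import hashlib


def leading_zero_bits(digest: bytes) -> int:
    """Count how many zero bits a hash digest starts with."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        # For a non-zero byte, bit_length() tells us how many leading bits are zero.
        bits += 8 - byte.bit_length()
        break
    return bits


def block_hash(block_data: bytes, nonce: int) -> bytes:
    """Double SHA-256 over the block data plus the guessed number (toy encoding)."""
    payload = block_data + nonce.to_bytes(8, "little")
    return hashlib.sha256(hashlib.sha256(payload).digest()).digest()


def mine(block_data: bytes, difficulty_bits: int) -> int:
    """Guess nonces in order until the hash starts with enough zero bits."""
    nonce = 0
    while leading_zero_bits(block_hash(block_data, nonce)) < difficulty_bits:
        nonce += 1
    return nonce


def verify(block_data: bytes, nonce: int, difficulty_bits: int) -> bool:
    """Checking a guess takes one hash, however long the search took."""
    return leading_zero_bits(block_hash(block_data, nonce)) >= difficulty_bits


if __name__ == "__main__":
    winning_nonce = mine(b"toy block", difficulty_bits=12)
    print(winning_nonce, verify(b"toy block", winning_nonce, 12))
```

Finding the number takes many guesses, while checking it takes a single hash on a single machine. That asymmetry is what makes this kind of proof so easy to reason about.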

As you can see, the algorithm is very simple. Because of its simplicity, it is mathematically very rigorous. As long as the calculation cannot feasibly be run backward, it is very safe and has no loopholes. Because this is only a mathematical game, the algorithm is, of course, simple. However, the calculation itself is meaningless: the more people mine Bitcoin, the more of humanity's resources are consumed.

One of the great missions of a decentralized storage public chain is to turn Bitcoin's resource-wasting style of mining into a service that benefits people. I followed the same idea when designing PP.io. When it comes to measuring the services provided, the most basic approach is to measure storage and traffic. Traditional cloud services are also measured by these two factors.

What is storage? Storage is how large the stored content is and how long it needs to be stored. That is one measured factor. What is traffic? Traffic is how many bytes are transferred, which is the other measured factor. Neither factor can be proven by a single-machine algorithm alone. Network communication is required, so that a third-party node can witness the exchange between the two sides and prove that the service was really provided. However, if this third-party node is dishonest, more third-party nodes are needed to witness it together, and these witnesses must reach consensus to complete the proof. This process is much more complicated than Bitcoin's stand-alone algorithm.
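As a rough illustration of these two measurements and of witnesses agreeing on a claim, consider the Python sketch below. It is not PP.io's actual proof mechanism: the function names, the byte-seconds unit and the two-thirds quorum are assumptions chosen only to show the shape of the problem.

```python
from collections import Counter
from typing import List, Optional


def storage_usage(size_bytes: int, seconds_stored: int) -> int:
    """Storage is size multiplied by time, expressed here in byte-seconds."""
    return size_bytes * seconds_stored


def agreed_traffic(witness_reports: List[int]) -> Optional[int]:
    """Return the transferred byte count that at least two thirds of the
    witnesses reported, or None if no value reaches that quorum.
    The two-thirds threshold is an assumption made for this sketch."""
    if not witness_reports:
        return None
    value, votes = Counter(witness_reports).most_common(1)[0]
    # Integer arithmetic avoids floating-point rounding at the threshold.
    if votes * 3 >= len(witness_reports) * 2:
        return value
    return None


if __name__ == "__main__":
    print(storage_usage(1024, 3600))
    # Three honest witnesses and one that under-reports the transfer.
    print(agreed_traffic([1000, 1000, 1000, 900]))
    # No agreement at all, so the claim cannot be proven.
    print(agreed_traffic([1000, 900, 800]))
```

Even this toy version needs several parties and a rule for disagreement, which a single-machine hash puzzle never has to deal with.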

Another point is that each time Bitcoin generates a block, only one node gets the reward, because it was the first to solve the math problem. That is very simple. A decentralized storage system, however, should reward every node that provided service during the period, according to its contribution, which is much more complicated. (If you have mined Bitcoin, you may mistakenly think that Bitcoin distributes rewards according to computing power. It does not. That impression comes from mining pools, which are centralized nodes. A mining pool collects the bitcoins won by the very few nodes that find blocks and, much like insurance, shares rewards that would otherwise go to only a few nodes among everyone who took part in the mining.)
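The difference between "winner takes all" and "everyone who served gets paid" can be sketched in a few lines of Python. This is a hypothetical illustration, not PP.io's settlement logic: the `split_reward` name, the integer token amounts and the rule for handing out the rounding remainder are all assumptions.

```python
from typing import Dict


def split_reward(period_reward: int, contributions: Dict[str, int]) -> Dict[str, int]:
    """Split a period's reward among nodes in proportion to measured contribution.

    `contributions` maps a node id to whatever unit of service was proven
    for that node during the period (an assumption of this sketch).
    """
    total = sum(contributions.values())
    if total == 0:
        return {node: 0 for node in contributions}

    # Integer division keeps payouts in whole tokens.
    payouts = {
        node: period_reward * amount // total
        for node, amount in contributions.items()
    }

    # Rounding down can leave a few tokens over; this sketch hands them
    # to the largest contributor so the payouts sum to the full reward.
    remainder = period_reward - sum(payouts.values())
    top_node = max(contributions, key=contributions.get)
    payouts[top_node] += remainder
    return payouts


if __name__ == "__main__":
    print(split_reward(1000, {"miner-a": 50, "miner-b": 30, "miner-c": 20}))
```

The arithmetic is trivial. The hard part, as the text explains, is proving that the contribution figures fed into it are honest.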

So the proof mechanism of decentralized storage is much harder than that of Bitcoin. How much harder? Take Filecoin, developed by the IPFS team, as an example. Its white paper, released in mid-2017, devotes about 80% of its length to the PoSt and PoRep algorithms. In the fall of 2018, the IPFS team published a 50-page paper on PoRep alone, which shows how complicated the PoRep algorithm is. Filecoin wants to reach the ultimate goal of decentralization directly. Yet it is now approaching the end of 2018, and apart from two papers and several demo videos, there is no other substantive information. They are said to be a team of scientists, so I believe they must have run into a considerable challenge.

When designing PP.io, I took into account that in an entirely decentralized environment, any node can do evil. If every node may be evil, developing any mechanism, especially the proof mechanism, becomes very complicated. The more complex the proof mechanism, the more vulnerabilities it has. However, if you initially give up on an entirely trustless environment and instead make some of the roles trusted, then only the miners who provide storage can do evil, and the whole algorithm becomes much simpler.

When I was designing the “strong center” phase of PP.io, I made sure that the Indexer Node, the Verifier Node and the settlement center were run as centralized services. Only the storage miners were decentralized and able to do evil. In this phase, we first implemented the proof mechanism for the storage miners, which was enough to run the entire system. Other factors were more critical during this time, such as performance, QoS and the economic model.

Then, in the “weak center” phase, the previously centralized services become nodes that can be deployed separately. Indexer Nodes and Verifier Nodes can be deployed by authorized parties, who must be bound by offline commercial terms that guarantee they will not do evil. During this phase, we build the technical proof mechanisms for these authorized nodes. Once both our engineering and our mathematics are mature, we can open up entry and move toward complete decentralization.

Iteration and optimization of quality of service (QoS)

I introduced the importance of quality of service (QoS) in a previous article: https://medium.com/@omnigeeker/what-is-the-qos-of-decentralized-storage-9d330457f390

Let me first tell the story of the technology I built during the PPTV startup years and how we handled QoS then. By that time I had been working on P2P technology for ten years, having started in 2004. Over those ten years I worked on P2P live broadcast, P2P VOD, and P2P technology on embedded devices. For P2P live QoS, with millions of people watching the same program online, the average time to start playing was 1.2 seconds, interruptions averaged 1.6 seconds per half hour, and the delay behind the broadcast source was at most 90 seconds across the whole network. For VOD QoS, I achieved a 90% bandwidth saving ratio; the average time to start playing was 1.5 seconds, interruptions averaged 2.2 seconds per half hour, and the average time to resume playback after seeking was 0.9 seconds.

We achieved such outstanding QoS, and it provided a rock-solid foundation for reaching 500 million users worldwide.

We did not get these results at the beginning. They came after a lengthy process of day-by-day optimization and many upgrade iterations. Along the way, we refined at least 100 QoS indicators, built a big data analysis system, and optimized different regions of different countries one by one. We also developed an A/B testing mechanism. The A network serves most users and runs a stable kernel. The B network serves a small number of volunteer users and runs the latest kernel, which lets us quickly evaluate how well a new algorithm performs on QoS. The B network upgrades its P2P kernel frequently until we are sure that the new kernel's QoS is better and very stable. Only then do we roll the new kernel out to the A network, so that most users get the best experience.
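The decision at the end of that loop, whether the B network's kernel is ready to replace the A network's, can be sketched as follows. This is a simplified, hypothetical illustration rather than the system we ran at PPTV: the `PlaybackSample` fields, the `should_promote` rule and the minimum sample count are assumptions, and a real evaluation would track far more indicators and use proper statistics.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class PlaybackSample:
    startup_seconds: float  # time from request to first frame
    stall_seconds: float    # total interruption time in a half-hour session


def should_promote(
    a_samples: List[PlaybackSample],
    b_samples: List[PlaybackSample],
    min_b_samples: int = 1000,
) -> bool:
    """Promote the B kernel only if there is enough B data and B is no worse
    than A on every tracked indicator (two indicators in this sketch)."""
    if not a_samples or len(b_samples) < min_b_samples:
        return False

    a_startup = mean([s.startup_seconds for s in a_samples])
    b_startup = mean([s.startup_seconds for s in b_samples])
    a_stall = mean([s.stall_seconds for s in a_samples])
    b_stall = mean([s.stall_seconds for s in b_samples])

    return b_startup <= a_startup and b_stall <= a_stall


if __name__ == "__main__":
    stable = [PlaybackSample(1.5, 2.0)] * 2000
    candidate = [PlaybackSample(1.2, 1.6)] * 1500
    print(should_promote(stable, candidate))
```

The important property is that one team can run this loop many times a week, which is exactly what a network that needs majority consensus for every upgrade cannot do.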

However, in a completely decentralized environment, an upgrade needs the consensus of most nodes, as with Bitcoin and Ethereum. Bitcoin upgrades have gone through many soft forks and hard forks, and major upgrades have dragged on for a long time. That kind of upgrade efficiency is very bad for QoS. Good QoS comes from iteration; it is not achieved in one shot. If a project goes straight to decentralized upgrades in its early stages, the cost of each upgrade will be extraordinarily high and the iteration cycle will be prolonged.

Good QoS makes a product; poor QoS makes a toy. There are currently some so-called internationally renowned public chain projects that tell very good stories and have many “religious followers”, but in my opinion they are toys, because they are very difficult to use.

In contrast, when I designed PP.io, I chose “strong center” from the beginning so that QoS could be adjusted very efficiently. Good QoS brings in more users, which means more data is stored. More data attracts more miners, forming a positive cycle. Only once QoS has been successfully achieved will we move on to decentralization. At that point, because QoS is better and there are more users, public trust will become more and more critical.

Economic model

When I designed PP.io, I designed an incentive mechanism. Under this economic incentive model, miners benefit from providing services on PP.io. The quality of the economic model directly determines the success or failure of the project.

Although the economic mechanism looks very simple, operating it in practice is very complicated. For example, the security problem of decentralized storage mentioned above also reflects the impossible triangle theory. Heavyweight mathematical proofs alone cannot completely solve the problem of nodes doing evil; economic punishment is also needed.

First of all, let me ask you a couple of questions and see what you think:

Do you think miners should have to put up collateral before they are allowed to mine?

The argument against it: Miners should be able to mine without collateral. If collateral is required, the entry threshold for miners will be very high.

The argument for it: Miners must put up collateral in order to mine. With collateral at stake, a miner can be punished for not following the rules, which keeps the miners stable. If miners are free to go online and offline at will, the P2P network will be very unstable, which lowers the stability of the entire service.

My second question is: if a miner suddenly goes offline, but not deliberately, such as during a power outage, should the miner be punished?

The argument against it: The miner should not be punished. The algorithm should be designed with a fault-tolerant mechanism that allows miners to go offline occasionally. As long as the miners are not doing evil, they should not be punished.

The argument for it: A computer program cannot tell whether the miner went offline deliberately, so the incentives must be applied consistently. Any miner that goes offline should be punished, so that low-quality miners are eliminated and high-quality miners are retained. The better the miners, the better the quality of service across the network.
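To see how the two "for" positions would combine mechanically, here is a small Python sketch of an up-front deposit (the mortgage, or collateral, in the questions above) that is reduced each time a miner misses an online check. It does not describe PP.io's actual rules: the class name, the fixed penalty and the eligibility threshold are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class MinerAccount:
    deposit: int      # tokens the miner locked up before starting to mine
    min_deposit: int  # below this level the miner may no longer serve

    def record_missed_check(self, penalty: int) -> None:
        """Apply the same penalty whatever the reason for being offline,
        because the program cannot tell a power outage from bad intent."""
        self.deposit = max(0, self.deposit - penalty)

    @property
    def eligible(self) -> bool:
        """A miner whose deposit has been eaten away is removed from service."""
        return self.deposit >= self.min_deposit


if __name__ == "__main__":
    miner = MinerAccount(deposit=100, min_deposit=80)
    for _ in range(3):
        miner.record_missed_check(penalty=10)
        print(miner.deposit, miner.eligible)
```

Every number in this sketch is a policy choice, and each choice changes who is willing to become a miner in the first place.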

What is your opinion on these two questions? Both are primarily economic issues. Whatever you choose, the results will be hard to predict. Economics teaches us that what you hope for is not necessarily what you get. It is the discipline that studies why things do not turn out the way people hoped, or, as the saying goes, why all his swans are turned to geese.

When I designed PP.io, how did I solve this problem? First of all, I established an economic incentive plan. This initial plan was not something I came up with off the top of my head; it was the result of a whole series of data modeling and conditional assumptions. Later I will make the source code of the economic modeling program available on the official PP.io GitHub.

The factors that affect the economic model are very complex. If you start with a decentralized approach, upgrading becomes very difficult. Miners are likely to refuse an upgrade that does not benefit them, just as Bitmain ignored the Bitcoin Core team when it pushed the BCH hard fork.

When I was designing PP.io, I realized that a good economic mechanism would be critical. A good economic model is the result of trial and error, with many adjustments made along the way. Premature decentralization therefore gets in the way of adjusting the economic model, which is why adopting the “strong center” at the beginning is the better choice. As the economic model gradually becomes stable and reasonable, PP.io will move progressively toward decentralization.

The above three reasons are why I chose to progress through the three phases of “strong center”, “weak center” and “decenter” when designing PP.io.