PPIO is a decentralized data storage and delivery platform for developers who value affordability, speed, and privacy. We have written several articles explaining PPIO’s data storage functions; this article looks at PPIO from the perspective of data delivery.

What Is Content Delivery?

Content delivery refers to the method of quickly delivering the same piece of data to multiple people while ensuring the transmission quality and user experience. Content can be delivered to people located in many places within a specific area, including an entire country.

Typical application scenarios that involve data delivery include static web pages, large file downloads, large image viewing, streaming media on demand, streaming live broadcasts, etc. Some commercial scenarios, such as multi-channel video calls, video conferencing, etc., are known as two-way data delivery.

Key Technologies and Scenario Applications for Content Delivery

1. CDN & P2P

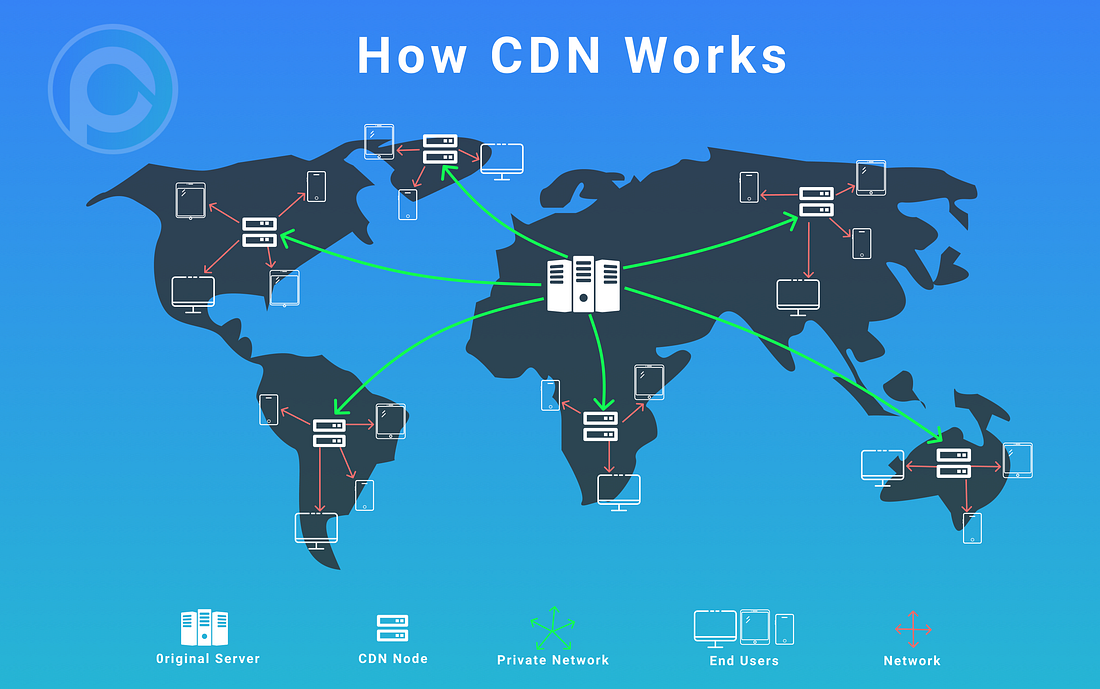

The traditional delivery technique is the CDN, a ‘content delivery network’ built on top of the existing network. Its basic principle is to push data from the source station to the server closest to the user. By fetching data directly from that nearby server, the user gets the best possible experience. CDNs rely on edge servers deployed in many locations, together with the load balancing, content delivery, scheduling, and other functional modules of a central platform, to reduce network congestion and improve response speed and hit rate. Content storage and delivery are the two main technological functions of a CDN.

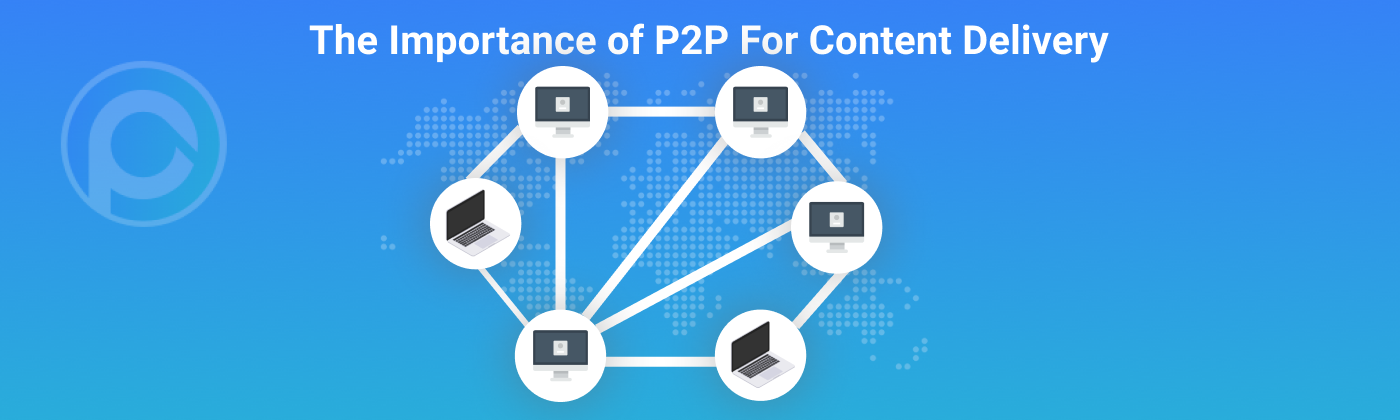

Content delivery is the earliest application of P2P technology. From Napster to eDonkey and BitTorrent, P2P networks transmit the same piece of content that people want to access. The more people that use the same content, the more nodes will upload the content and the faster the download speed will be. This is a typical content delivery scenario.

Although both P2P and CDN are content delivery technologies, they are implemented differently. In a CDN, every node is a server, and the network forms a tree structure that delivers data level by level. In a P2P network, on the other hand, every client can upload content: while a client is downloading data, it is also uploading data to other clients. Ideally, a P2P network is a reciprocal ecosystem in which everyone serves me, and I serve everyone.

P2P has several advantages over CDN.

- P2P is a multi-point download network, so a client can use its full bandwidth and download faster. When the server is far away in network terms, P2P achieves higher speeds than a CDN.

- Because data comes from many nodes at once, P2P’s overall download speed fluctuates less than a download that depends on a single node.

- P2P saves bandwidth of the resource publisher.

P2P also has disadvantages compared with CDN.

- The implementation of P2P is more complicated than that of CDN. If we need to adjust the algorithm for business changes, P2P is substantially more challenging to change than CDN.

- Because the quality of a P2P network depends on the participation of high-quality nodes, a P2P project is harder to get started than a CDN project. For a business that wants a quick start, CDN is the better option.

- For ISPs, P2P is harder to control than CDN. ISPs typically compensate for this by limiting P2P traffic, which leads to an unsatisfactory user experience.

However, P2P and CDN are not mutually exclusive. P2SP technology combines both, allowing the client to download from CDN nodes and from the P2P network. A service built on P2SP is also known as a PCDN service.
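As a rough illustration of the P2SP idea, the sketch below assigns each piece of a file to a peer that already holds it and falls back to the CDN node otherwise. The function name `assign_pieces`, the least-loaded-peer policy, and the `"cdn"` label are assumptions made for this example, not PPIO’s actual scheduler.

```python
def assign_pieces(piece_ids, peer_have, cdn_name="cdn"):
    """Map each piece to a source: a peer that holds it, else the CDN.

    peer_have: dict of peer name -> set of piece ids that peer holds.
    Among peers holding a piece, the least-loaded one is chosen so that
    the work spreads across the P2P network (illustrative policy only).
    """
    load = {peer: 0 for peer in peer_have}
    plan = {}
    for piece in piece_ids:
        holders = [p for p, have in peer_have.items() if piece in have]
        if holders:
            # Least-loaded holder; ties are broken by name for determinism.
            chosen = min(holders, key=lambda p: (load[p], p))
            load[chosen] += 1
            plan[piece] = chosen
        else:
            # No peer has this piece yet, so fall back to the CDN node.
            plan[piece] = cdn_name
    return plan


peers = {"a": {0, 1}, "b": {1, 2}}
plan = assign_pieces(range(4), peers)
```

In this toy run, pieces held by at least one peer are served from the P2P network, and only the piece nobody holds yet is fetched from the CDN, which is how P2SP saves the publisher’s bandwidth while keeping a reliable fallback.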

2. Video Applications Are a Common Use of Delivery Technology

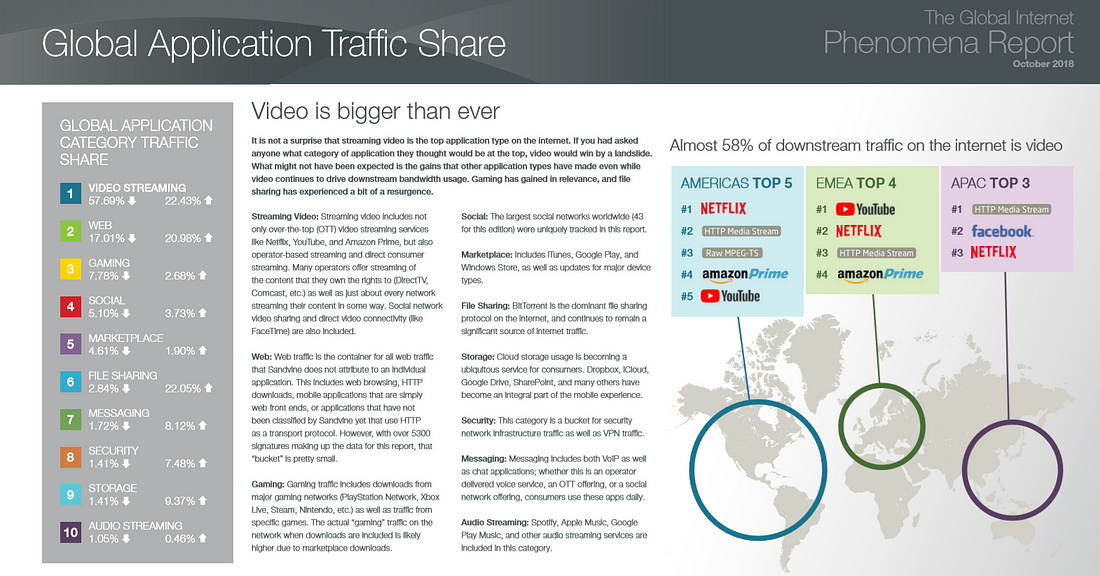

Content delivery applications are mostly media-heavy applications. Video-on-demand apps like YouTube and Netflix, live video services like Hulu, and short-video platforms like TikTok are all content delivery applications. An October 2018 report from Sandvine stated that video applications accounted for about 58% of downstream Internet traffic. For this reason, we also focus on improving the network’s QoS for video streaming services when designing the PPIO platform.

3. Content Delivery Is Inseparable From Storage

Data storage and delivery are both about reading and using data, so they are bound together and cannot be separated. They differ, however, in the number of users. If only one person reads and uses a piece of data in a network, it is data storage. When many people read or use the same data, it is content delivery. In practice, the two require different network designs and different levels of QoS.

PPIO’s Technology Design For Content Distribution

At PPIO, we are not only aware of the complex business and technology needs for the content delivery application, but we also have rich experience in designing and operating a successful P2P video streaming product. PPIO’s core team is the founding team of PPTV, the largest P2SP Chinese video platform. PPTV acquired over 450 million users. Our experience with P2P video products in the past decade has enabled our team to accumulate rich knowledge and practices of P2P delivery projects in terms of technology, products, and operational practices. These experiences allow us to design the technical architecture that meets the actual needs of delivery products. The following is PPIO’s technical design for the content delivery scenario.

1. Overlay Network

PPIO supports an overlay network. Each storage node (miner) takes the storage nodes with the fastest physical connections as its neighbors. As a result, during data transmission and information exchange, every node can make full use of its neighbors, which significantly improves network efficiency.
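A minimal sketch of neighbor selection, assuming that "faster physical connection" can be approximated by measured round-trip time. The function name `pick_neighbors`, the RTT metric, and the value of `k` are illustrative assumptions rather than PPIO’s real criteria.

```python
import heapq


def pick_neighbors(rtt_ms, k=3):
    """Return the k candidate nodes with the lowest measured RTT.

    rtt_ms: dict of node id -> round-trip time in milliseconds.
    Ties are broken by node id so the result is deterministic.
    """
    best = heapq.nsmallest(k, rtt_ms.items(), key=lambda kv: (kv[1], kv[0]))
    return [node for node, _ in best]


neighbors = pick_neighbors({"a": 40, "b": 12, "c": 95, "d": 20}, k=2)
```

A real overlay would likely refresh these measurements periodically and also weigh bandwidth and uptime, but the principle of preferring the closest nodes stays the same.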

2. Optimization of Media Streaming Transmission

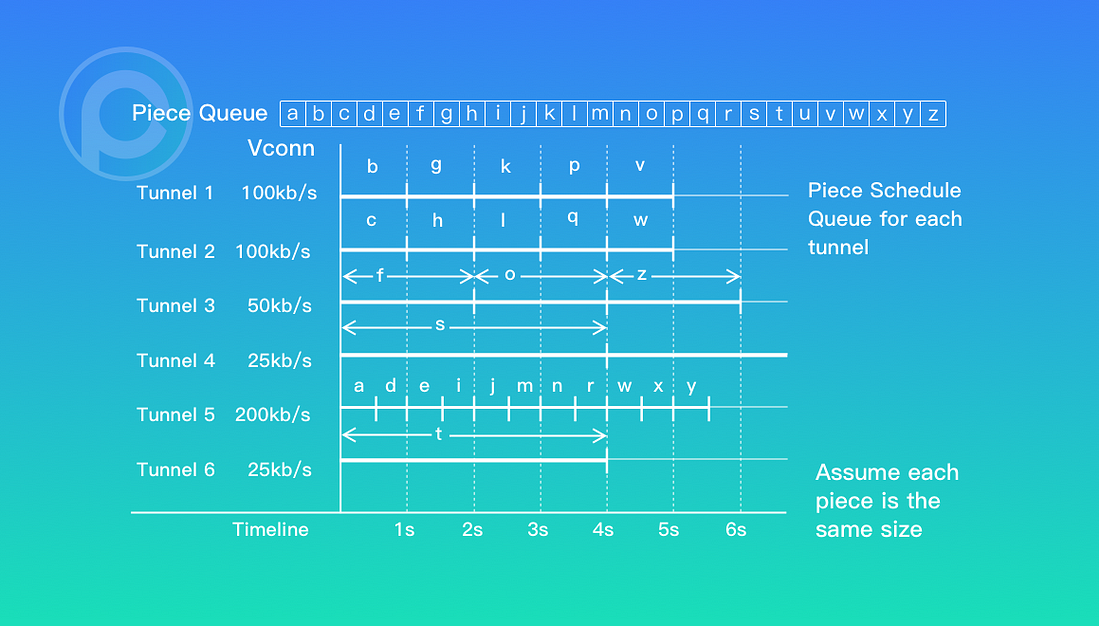

PPIO implements a special data-driven download algorithm for media streaming to ensure smooth playback of live streaming media.
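The article does not describe the algorithm in detail. As one possible reading, a data-driven scheduler lets each node look at which segments its neighbors advertise and request the missing segments closest to the playback position first. The sketch below follows that reading; `next_requests`, the window size, and the request limit are assumptions for illustration.

```python
def next_requests(playhead, have, neighbor_maps, window=8, max_requests=4):
    """Choose which missing segments to request next.

    playhead: id of the segment currently being played.
    have: set of segment ids already downloaded.
    neighbor_maps: dict of neighbor -> set of segment ids it advertises.
    Segments closest to the playhead are the most urgent, so they are
    considered first. Returns a list of (segment, neighbor) pairs.
    """
    requests = []
    for seg in range(playhead, playhead + window):
        if seg in have:
            continue
        holders = sorted(n for n, m in neighbor_maps.items() if seg in m)
        if holders:
            requests.append((seg, holders[0]))
        if len(requests) == max_requests:
            break
    return requests


reqs = next_requests(10, {10, 11}, {"n1": {12, 14}, "n2": {12, 13}},
                     window=5, max_requests=2)
```

Prioritizing by distance from the playhead is what keeps playback smooth: a segment needed in two seconds matters more than one needed in twenty.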

3. P4P Technology

P2P will generate a large amount of cross-ISP traffic between networks. There is no additional charge for the traffic within the network of an ISP, but ISPs usually charge extra for the traffic generated by the transmission between different operators. Is there any way to maintain the advantages of P2P technology while reducing cross-ISP traffic? Yes, with P4P technology.

P4P (Proactive Network Provider Participation for P2P) is a method for ISPs and for P2P software to optimize connections. It enables peer selection based on the topology of the physical network and reduces traffic on the backbone network and the operation costs of network providers to improve data transfer efficiency. Compared to P2P that selects nodes randomly, P4P mode can coordinate network topology data and select nodes effectively, thus improving network routing efficiency.
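To make topology-aware peer selection concrete, here is a small sketch that ranks candidate peers so that those in the same ISP and region come first. The dictionary keys `id`, `isp`, and `region` and the three cost levels are hypothetical; a real P4P deployment would obtain topology and cost information from the ISP.

```python
def rank_peers(me, candidates):
    """Sort candidates so peers that avoid cross-ISP traffic come first.

    me and each candidate are dicts with the hypothetical keys
    'id', 'isp' and 'region'.
    """
    def cost(peer):
        if peer["isp"] == me["isp"] and peer["region"] == me["region"]:
            return 0  # same ISP and region: cheapest path
        if peer["isp"] == me["isp"]:
            return 1  # same ISP: traffic stays inside one operator
        return 2      # cross-ISP traffic, the case P4P tries to reduce

    return sorted(candidates, key=lambda p: (cost(p), p["id"]))


me = {"id": "me", "isp": "A", "region": "east"}
candidates = [
    {"id": "p1", "isp": "B", "region": "east"},
    {"id": "p2", "isp": "A", "region": "west"},
    {"id": "p3", "isp": "A", "region": "east"},
]
ranked = [p["id"] for p in rank_peers(me, candidates)]
```

Compared with random peer selection, this ordering keeps most transfers inside one operator’s network and only reaches across ISPs when no closer peer is available.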

The PPIO team gained extensive experience working with operators while developing PPTV, so we are well placed to implement ISP-friendly P4P technology in PPIO’s design.

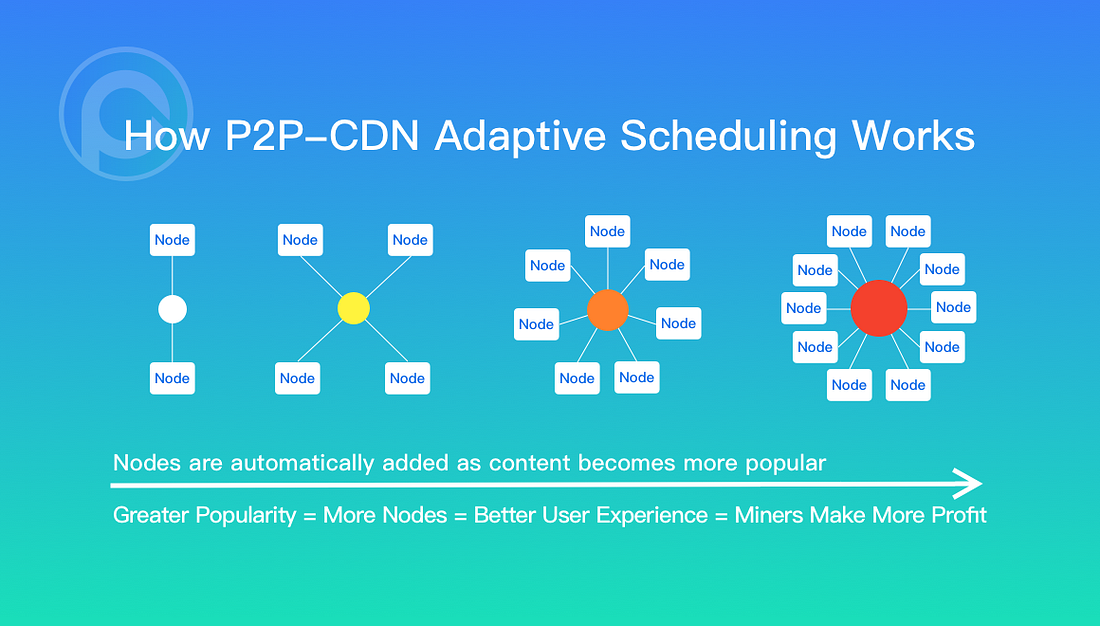

4. Adaptive Scheduling of Popular Content

PPIO supports P2P-CDN. In P2P-CDN, adaptive scheduling of popular content is an essential means to improve the quality of service. Adaptive scheduling ensures that content is scheduled to more and more storage nodes as it becomes popular. The more nodes hosting the content, the better the user experience and the more nodes that make a profit. Conversely, when the file is not popular anymore, the network will adaptively reduce the copies. Thus, the network forms a dynamic balance. PPIO continues to put in an effort to design and improve this algorithm.
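The dynamic balance described above can be sketched as a target copy count that rises and falls with demand. Everything here is an assumption for illustration: the function name `target_replicas`, the per-node capacity of 50 requests per hour, and the lower and upper bounds are not PPIO parameters.

```python
import math


def target_replicas(requests_per_hour, per_node_capacity=50,
                    min_copies=2, max_copies=200):
    """Return how many copies the network should aim to hold.

    The count grows with demand and shrinks again when demand drops,
    but always stays within [min_copies, max_copies].
    """
    needed = math.ceil(requests_per_hour / per_node_capacity)
    return max(min_copies, min(max_copies, needed))


quiet = target_replicas(10)      # unpopular content keeps the minimum
busy = target_replicas(1000)     # popular content gets more copies
```

Re-evaluating this target periodically gives the behavior the text describes: more nodes host content as it becomes popular, and copies are reduced once interest fades.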

5. Pre-Delivery Mechanism

Besides adaptive scheduling of popular content, PPIO also provides a pre-delivery mechanism to improve the user experience. What is the pre-delivery mechanism used for?

Take a TV series as an example. If a lot of people are already watching Game of Thrones episode 7, it is highly likely that a lot of people will watch the next episode too. Therefore, when the publisher releases a new episode, they can push its resources to more miners in advance. Even before you start watching the next episode, enough storage nodes are already providing the resources for you to access that content. This pre-delivery design takes full advantage of the P2P network and can significantly enhance the viewing experience. The pre-delivery mechanism can be used in many more scenarios, as long as publishers can predict which content will be needed.

Content publishers can pay extra for a pre-delivery service for specific content. They can specify the areas, ISPs, and time period for the pre-delivery, and the price of pre-delivery storage varies with the region, ISP, and time of day. The implementation of pre-delivery in PPIO follows basically the same principle as decentralized storage: miners don’t know in advance whether the content will become popular, so in exchange for the chance to earn more they take the risk of hosting content that never becomes popular. The difference between pre-delivery storage and on-demand storage is that in the former, storage nodes save a full copy of the pre-delivered content, while in the latter, storage nodes mainly rely on erasure coding. The differences between full copy and erasure code are explained later in this article.

6. Design for P2P Live Streaming

The PPIO platform not only considers the download of streaming media on demand but also includes live streaming services. A live broadcast is essentially the delivery of a series of small files. These small files have a short life cycle and are useless after a certain period of time. Despite their small size, these files place high demands on delivery efficiency: they need to be delivered very quickly to as many nodes as possible. The overall structure of a live broadcast is consistent with PPIO’s streaming media system, but the way files are split and the download algorithm are different.

Live broadcasting falls into two categories. The first is high-latency live broadcast, mainly used for events, news, and similar programs. This type of broadcast is often a live channel that many users watch at the same time, and these users are typically not very sensitive to delay. The second is low-latency live broadcast, mainly used for streamers and live shows. Its defining feature is instant interaction between the streamer and the audience, which requires low latency, usually within 5 seconds. This type of broadcast has a smaller audience than high-latency live broadcast.

To support both types of live broadcasting scenarios, the PPIO platform is designed to be compatible with three P2P implementations: pushing data to subscriber nodes, pulling data from other nodes, and requesting data from source nodes. Our experience of designing the largest P2P live streaming platform and other live broadcasting products shows that combining these three techniques can significantly improve the QoS of a streaming and broadcasting platform.
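One way these three techniques could be combined is sketched below: use a segment that was pushed to the node if it has arrived, pull it from a neighbor if there is still time, and otherwise request it from the source. The function name `fetch_strategy` and the `pull_budget_s` threshold are assumptions for illustration, not PPIO’s actual decision logic.

```python
def fetch_strategy(segment, pushed, neighbor_maps, seconds_to_deadline,
                   pull_budget_s=1.0):
    """Decide how a live segment should be obtained.

    pushed: set of segment ids already pushed to this node.
    neighbor_maps: dict of neighbor -> set of segment ids it holds.
    seconds_to_deadline: time left before the segment must be played.
    """
    if segment in pushed:
        return "push"    # already delivered by a publisher or parent node
    has_holder = any(segment in m for m in neighbor_maps.values())
    if has_holder and seconds_to_deadline >= pull_budget_s:
        return "pull"    # enough time left to fetch from a neighbor
    return "source"      # last resort: ask the source node directly


choice = fetch_strategy(6, set(), {"n1": {6}}, seconds_to_deadline=0.2)
```

In this sketch a low-latency broadcast, with its tight deadlines, falls back to the source more often, while a high-latency broadcast can rely mostly on pulling from neighbors.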

7. PPIO’s PCDN Design

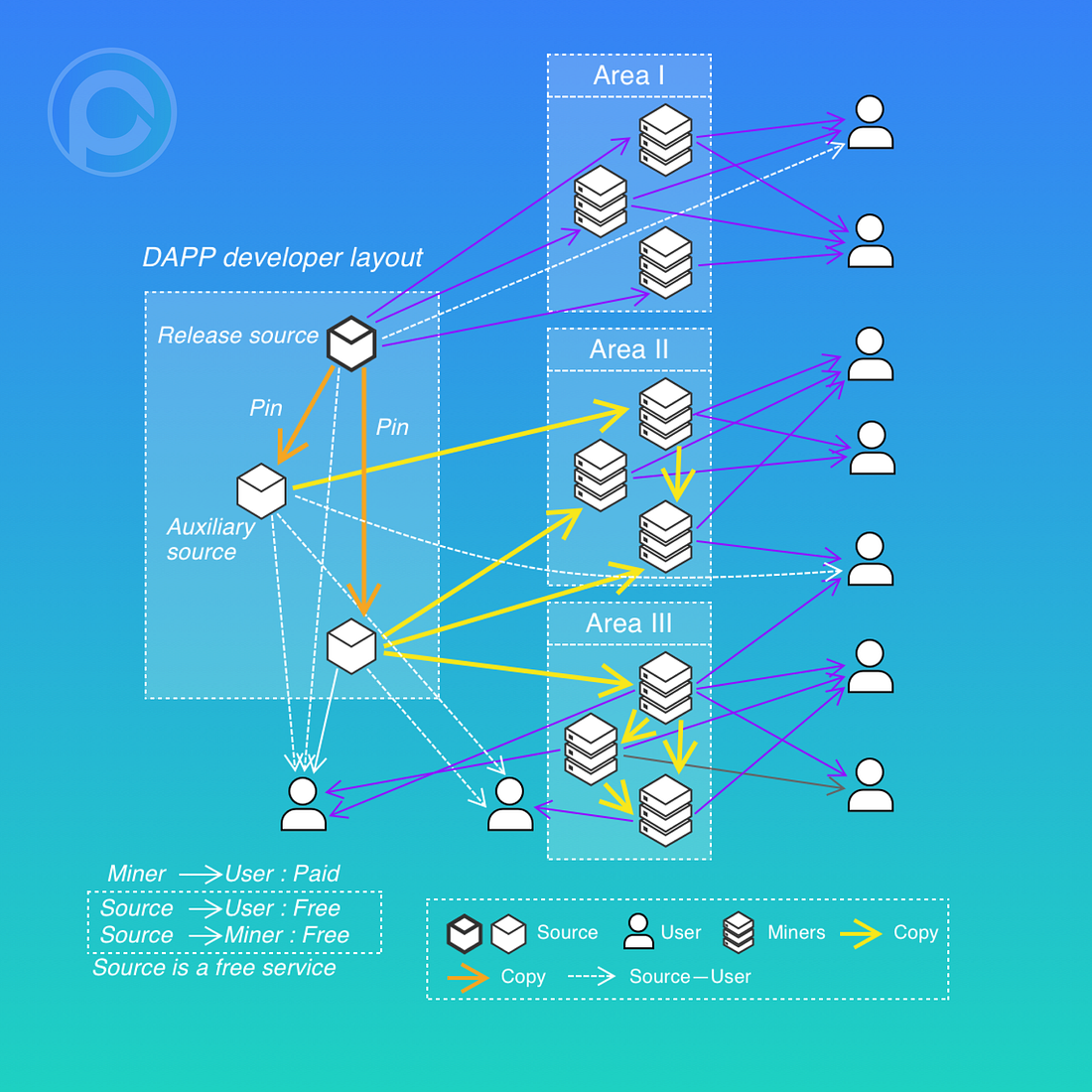

PCDN utilizes the abundant bandwidth and storage resources of miners in the P2P network to achieve faster data delivery. PPIO is designed to support PCDN and to provide an easy-to-use interface for DApps to accelerate their content delivery.

The content is first published on the source node before being delivered. If the source node is online, the user can download from it. However, as the number of users downloading from the same source node increases, the node’s available bandwidth gets exhausted quickly, and the downloads will slow down. With PCDN, when other miner nodes store and provide download services for the same piece of content, users will be able to download from multiple peers in the network and enjoy a much better experience.
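A back-of-the-envelope calculation shows why a single source slows down and why extra peers help. The function `per_user_mbps` and all bandwidth figures are made-up illustrative values, and the model assumes bandwidth is shared evenly among users.

```python
def per_user_mbps(source_mbps, users, peer_upload_mbps=0.0, peers=0):
    """Average download rate per user under an even-sharing model.

    Total supply is the source's upload bandwidth plus the upload
    bandwidth contributed by miner nodes holding the same content.
    """
    total = source_mbps + peer_upload_mbps * peers
    return total / users


source_only = per_user_mbps(100, users=50)
with_pcdn = per_user_mbps(100, users=50, peer_upload_mbps=10, peers=40)
```

With 50 users sharing a 100 Mbps source, each user gets 2 Mbps on average. Adding 40 miners that each upload 10 Mbps raises the total supply to 500 Mbps and the per-user average to 10 Mbps.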

There are two ways for applications to implement PCDN in PPIO.

- To take advantage of content scheduling as previously described. Because PPIO embeds optimized scheduling of popular content in its overlay network, miners proactively download and store this data to provide download services. As a result, data gets copied and distributed to the parts of the network where it is popular. The download experience improves as the number of available copies increases.

- To enable and configure PCDN directly. PPIO provides a set of APIs to allow DApps to set up PCDN for their content. The applications can specify the number of copies to be maintained in specific parts of the network, or specific geographic locations regarding the country, ISP, state, and city, as defined in the P4P database. PPIO will find the miner nodes in the specified area to store content and provide download services. As the application specifies the scheduling, it needs to compensate the miners’ cost in storage spacetime, scheduling, and conducting storage proofs. The image above shows the PCDN-driven data flow in the network in this case.

What Are the Differences Between PPIO’s Delivery and Storage Design?

Data storage and delivery have different technology requirements, which we elaborate on below.

1. Full Copy vs. Erasure Code

Data delivery and data storage have different ultimate goals. Delivery is about getting content quickly. In a common delivery scenario, the source node is always there, so we don’t have to worry about data loss. Even if a storage node accidentally loses some data, we can still request the same data from the source node. Therefore, in the data delivery scenario, we have decided to implement a full copy algorithm.

But the main goal of the data storage is to ensure the safety of data; that is, to prevent data from being lost and to reduce the loss rate. If we use a full copy algorithm, we must replicate a large number of data copies to achieve a 99.999999999% no loss rate. Erasure code, on the other hand, can produce a no loss rate at the level we want with minimum redundancy space. Based on this, we have come to think that the erasure code is a better solution for data storage.

To summarize, data delivery pursues high-speed transmission in which a full copy can better fit our goal. Data storage must have a 99.999999999% no loss rate, so the application of erasure code technology is the better choice.
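The trade-off can be checked with a simple probability model. The sketch below assumes that each node fails independently with probability `p` before any repair happens, which is a simplification, and the numbers are illustrative. With full copies, data is lost only if every copy fails. With a `(k, m)` erasure code, data is split into `k` fragments plus `m` parity fragments and is lost only if more than `m` of the `k + m` fragments fail.

```python
from math import comb


def loss_full_copy(p, copies):
    """Probability that all copies are lost."""
    return p ** copies


def loss_erasure(p, k, m):
    """Probability that more than m of the k + m fragments are lost."""
    n = k + m
    return sum(comb(n, i) * p**i * (1 - p)**(n - i)
               for i in range(m + 1, n + 1))


p = 0.1
three_copies = loss_full_copy(p, 3)       # 3x storage overhead
coded = loss_erasure(p, k=10, m=20)       # also 3x overhead: 30 / 10
```

At the same 3x storage overhead, the erasure-coded layout has a far lower loss probability than three full copies under this model. Full copy is the special case `k = 1`, which is why it needs many more copies to reach the same no-loss rate.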

2. Memory Cache

Another big difference between data delivery and data storage is that data delivery tends to follow the well-known Pareto principle, whereas data storage does not.

When it comes to data delivery, the Pareto principle, also known as the 80/20 rule, refers to the fact that 20% of network content attracts 80% of the traffic. And the top 20% of network content also follows the 80/20 rule. Therefore, we can typically divide the delivered content into three groups: primary content, secondary content, and tail content. Primary content concentrates the most traffic, secondary content attracts less traffic, and the traffic at the tail end is scattered.
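Applying the 80/20 rule twice gives a feel for the three groups. The sketch below assumes one particular reading: primary content is the top 20% of the top 20%, secondary content is the rest of the top 20%, and tail content is everything else. The function name `tier_shares` and this exact split are illustrative assumptions.

```python
def tier_shares(content_share=0.2, traffic_share=0.8):
    """Return (share of content, share of traffic) for each tier."""
    primary = (content_share ** 2, traffic_share ** 2)
    secondary = (content_share - primary[0], traffic_share - primary[1])
    tail = (1 - content_share, 1 - traffic_share)
    return {"primary": primary, "secondary": secondary, "tail": tail}


shares = tier_shares()
```

Under these assumptions, about 4% of the content carries about 64% of the traffic, the next 16% of the content carries about 16%, and the remaining 80% of the content shares the last 20% of the traffic.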

Categorizing content by popularity helps us choose the appropriate storage nodes and reduce storage cost. A memory cache fits primary content and, where capacity allows, secondary content. Secondary content that does not fit in memory calls for high-speed storage media such as SSDs. Tail content only needs a mechanical hard disk once cost is taken into account.

In contrast, data storage does not follow the Pareto principle. All content is tail content because very few people store the same content. Data storage can instead be categorized into three groups: hot storage, warm storage, and cold storage. Hot storage means that data is often read after being written. Warm storage means that data is rarely read after being written and may never be read again, for example old data on a private network disk. Cold storage means that data is seldom used after being written and doesn’t need instant access, for example video surveillance data.

In the PPIO network, the full copy and erasure code algorithms are designed to co-exist for hot storage scenarios. The full copy guarantees high-speed transmission, and the erasure code reduces the data loss rate to a very low level. We recommend that nodes carrying hot storage use high-speed storage such as SSDs. Warm and cold storage, meanwhile, may use only the erasure code algorithm. Because warm and cold content is rarely read or written, we recommend storing it on mechanical hard drives to minimize cost.
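The recommendations above can be summarized as a small lookup table. The names `STORAGE_POLICY` and `storage_policy` and the string labels are hypothetical and only restate the text; they are not part of any PPIO interface.

```python
# Redundancy scheme and recommended media per storage class,
# restating the recommendations in the text (labels are illustrative).
STORAGE_POLICY = {
    "hot": {"redundancy": ("full_copy", "erasure_code"), "media": "ssd"},
    "warm": {"redundancy": ("erasure_code",), "media": "hdd"},
    "cold": {"redundancy": ("erasure_code",), "media": "hdd"},
}


def storage_policy(storage_class):
    """Look up the policy for 'hot', 'warm' or 'cold' storage."""
    return STORAGE_POLICY[storage_class]


hot = storage_policy("hot")
```

Only hot storage pays for both schemes: the full copy serves fast reads, while the erasure code keeps the loss rate low.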

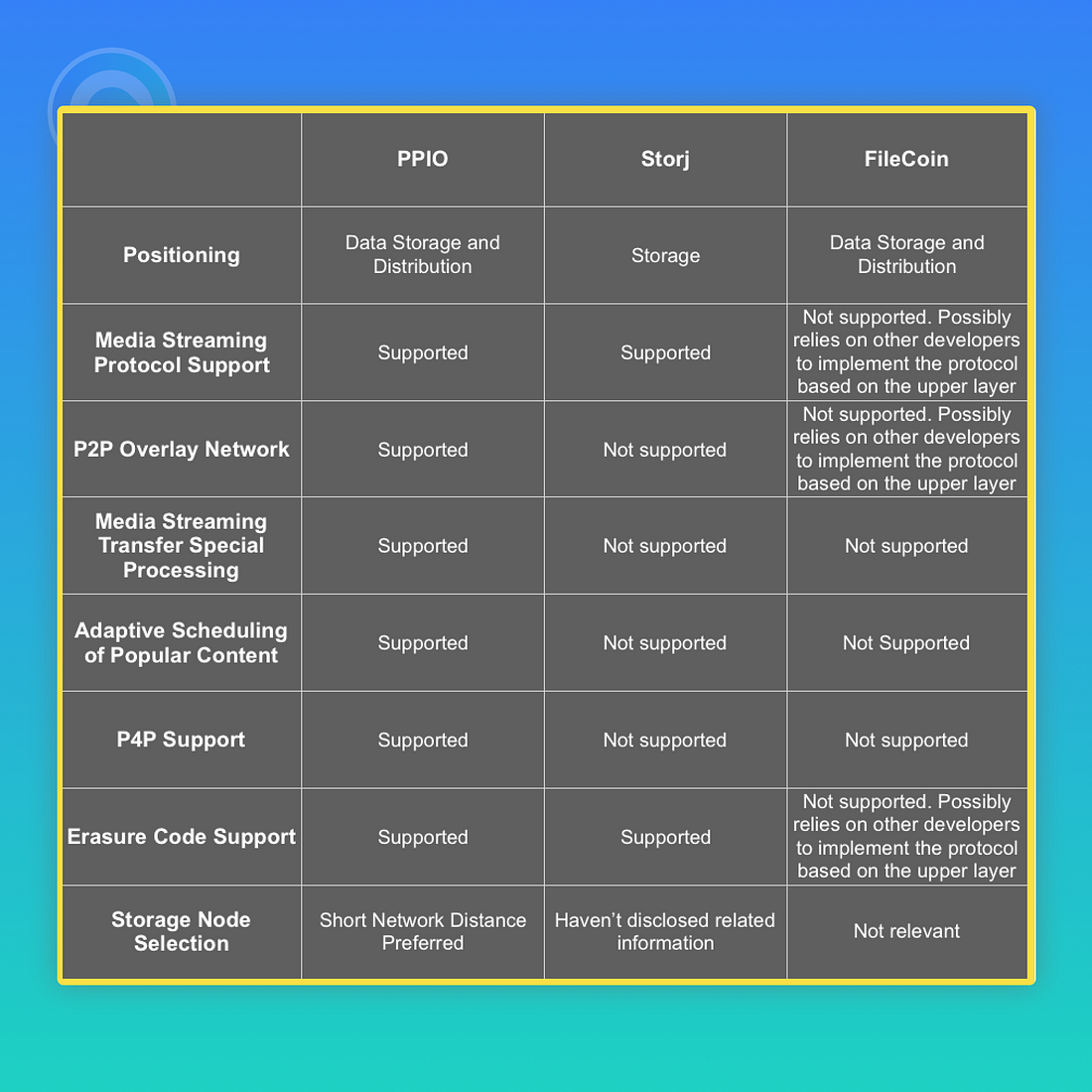

PPIO To Focus More on Data Delivery

Compared to other decentralized storage projects like Filecoin and Storj, PPIO is paying more attention to data delivery. As we covered in our Ultimate Guide To Understanding The Differences Between PPIO, Filecoin, and Storj, these other projects are focusing more on data storage. Here is a simple comparison table to analyze the three storage chains and give comparative information.

In summary, we believe these are the advantages of PPIO in the field of data delivery.
